Leveraging the Dark Side: How CrowdStrike Boosts Machine Learning Efficacy Against Adversaries

- Adversarial machine learning (ML) attacks can compromise an ML model’s effectiveness and ability to detect malware through strategies such as static ML evasion, in which known malware variants are modified to slip past the model

- CrowdStrike improves detection capabilities by red teaming our own ML malware classifiers using automated tools that generate new adversarial samples

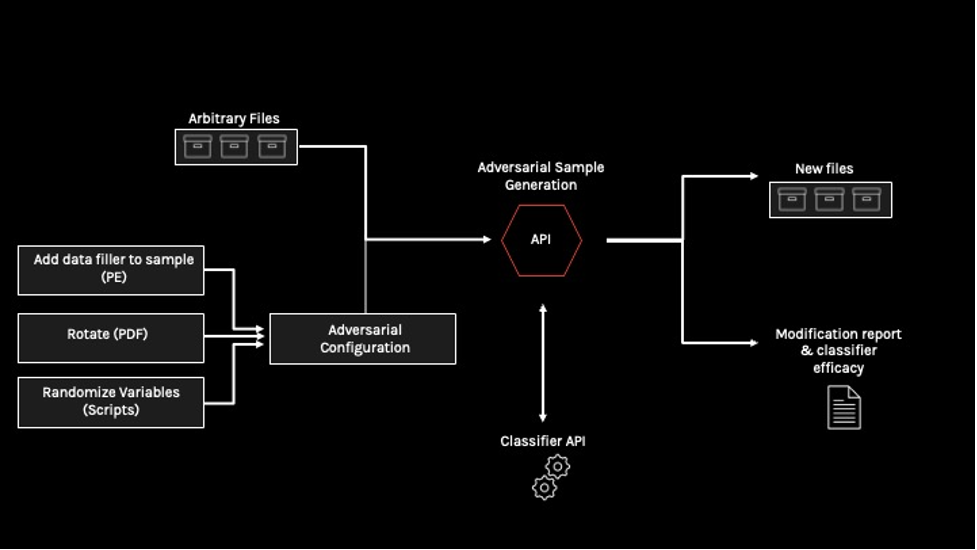

- CrowdStrike’s Adversarial Pipeline can automatically generate millions of unique adversarial samples based on a series of generators with configurable attacks

- Using new, out-of-sample adversarial samples in ML model training data has shown a 19% increase in retention of malware samples at high confidence levels

The strength of the CrowdStrike Falcon® platform lies in its ability to detect and protect customers from new and unknown threats by leveraging the cloud and expertly built machine learning (ML) models.

In real-world conditions and in independent third-party evaluations, Falcon’s on-sensor and cloud ML capabilities consistently achieve excellent results across Windows, Linux and macOS platforms. This is especially impressive given ML uses no signatures, enabling the Falcon platform to identify malicious intent based solely on file attributes. The results reflect the effectiveness of CrowdStrike’s multilevel ML approach, which incorporates not only file analysis but also behavioral analysis and indicators of attack (IOAs).

However, ML is not infallible. It is susceptible to adversarial attacks from humans and from other ML algorithms. Examples of the latter include introducing compromised data during the training process or subtly modifying existing malware versions.

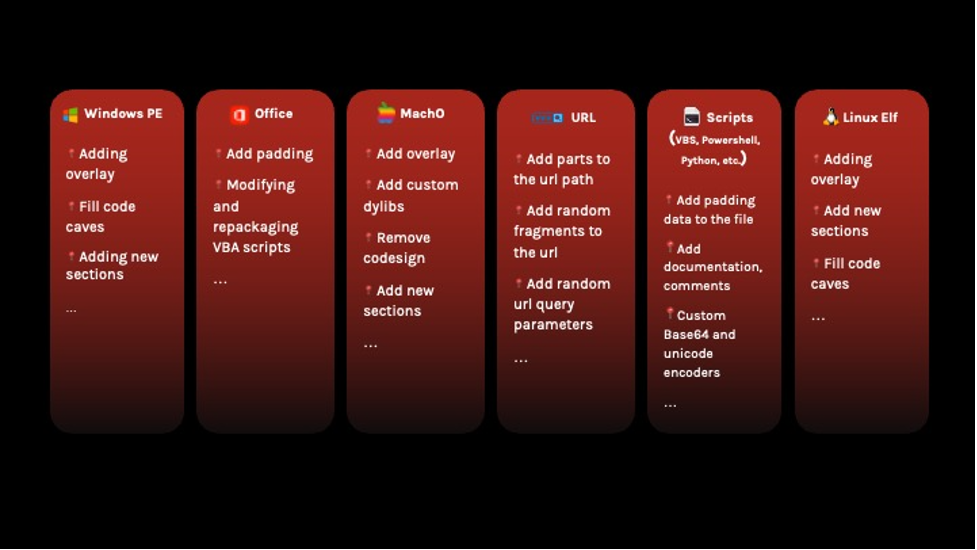

CrowdStrike’s Adversarial Pipeline is a tool that combats one of the most commonly used adversarial ML tactics: static ML evasion. Our research team can use this pipeline to generate a large volume of new and unique adversarial samples using a series of generators with configurable attacks to simulate new and modified versions of known malware. These samples are used to train our ML models to significantly increase their efficacy in detecting cyberattacks that employ static ML evasion.

The Growing Threat of Adversarial ML Attacks

As recently as five years ago, adversarial ML attacks were relatively rare, but by 2020, the threat had increased to the point that MITRE released its ATLAS (Adversarial Threat Landscape for Artificial-Intelligence Systems) Threat Matrix.

Adversarial ML, or adversarial attacks, involve a broad set of methods to trick ML into providing unexpected or wrong outputs. Some examples are:

- Forcing a classifier to say a picture of a panda is a gibbon by making targeted mathematical modifications to the panda image

- Appending some text extracted from a clean Windows executable to a detected malicious file to fool a classifier into thinking it’s clean

- Breaching and sneaking into the internal network of a company and inserting a few million pictures of traffic lights that are labeled as “duck” into the image database
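The second example, appending clean content to a detected file, can be illustrated with a deliberately naive model. The sketch below is not a real detector: `toy_malice_score`, the threshold and the byte patterns are all hypothetical, chosen only to show how padding a file with benign text can dilute a feature that a static classifier depends on while leaving the original bytes intact.

```python
# Toy illustration only: a stand-in "classifier" that scores a file by the
# share of its bytes that fall outside printable ASCII. Real static ML models
# use far richer features; this is a hypothetical example.

def toy_malice_score(data: bytes) -> float:
    """Return the fraction of bytes outside the printable ASCII range."""
    if not data:
        return 0.0
    non_printable = sum(1 for b in data if b < 32 or b > 126)
    return non_printable / len(data)


def append_benign_text(sample: bytes, benign: bytes, repeats: int) -> bytes:
    """Append benign content after the original bytes, leaving them untouched."""
    return sample + benign * repeats


THRESHOLD = 0.5  # hypothetical decision threshold: score >= 0.5 means "malicious"

original = bytes(range(128, 256)) * 8  # placeholder "malicious" bytes
benign = b"The quick brown fox jumps over the lazy dog. "
modified = append_benign_text(original, benign, repeats=100)

score_before = toy_malice_score(original)
score_after = toy_malice_score(modified)
print(f"before: {score_before:.2f}  after: {score_after:.2f}")
print("still detected:", score_after >= THRESHOLD)
```

The original bytes are still present at the start of the modified file, yet the toy score drops below the threshold. Production classifiers are much harder to fool than this, but the underlying pressure is the same.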

Attacks can be executed at multiple stages of the model’s development life cycle. As MITRE states, data can be weaponized during training by injecting incorrectly labeled data into the training set, which affects the model and any others subsequently trained using that corpus, or during classification by crafting inputs that exploit the model. Existing malware can be modified to evade detection by ML models scanning for specific signatures. Not surprisingly, these methods can be chained, combined and merged to increase the effectiveness of the attack. The result of a successful adversarial ML attack is malware that is able to stealthily evade a trained and production-ready ML model.

It’s no wonder the subject of adversarial attacks has gained so much interest across the field of ML, including malware detection, object recognition, autonomous driving, medical systems and other applications. Researchers across the ML spectrum have been working to improve model robustness and detect adversarial attacks based on malicious input.

In security terms, fully undetectable malware is malicious software never before seen in the wild. It therefore cannot be detected by antivirus software that relies on a database of known virus definitions or signatures. Modifying samples of existing malware to achieve fully undetectable malware or to avoid a designated antivirus detection (static or ML-enabled) is one of the oldest tricks in the book used by red teams and attackers.

Small, targeted changes in the analyzed sample can lead to drastically different results in detection efficacy. Static, rule-based detections are also susceptible to this type of attack, which has been successfully applied in the wild by Emotet and other sophisticated threat actors.

A research paper published in March 2022 outlines the challenge in combating this form of adversarial malware:

“The traditional approach — based on analysis of static signatures of the malware binary (e.g., hashes) — is increasingly rendered ineffective by polymorphism and the widespread availability of program obfuscation tools. Using such tools, malware creators can quickly generate thousands of binary variants of functionally identical samples, effectively circumventing signature-based approaches.”
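The weakness of hash-based signatures described in the quote is easy to demonstrate. In this minimal sketch, the sample bytes and the `blocklist` set are placeholders rather than real malware or a real signature database. Adding a single padding byte produces a completely different SHA-256 digest, so an exact-match lookup no longer fires.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of the data as a hex string."""
    return hashlib.sha256(data).hexdigest()


# Placeholder bytes standing in for a known malicious file.
known_bad = b"MZ" + b"\x90" * 64

# A hypothetical signature database keyed on file hashes.
blocklist = {sha256_hex(known_bad)}

# A "variant": the same bytes plus one byte of padding.
variant = known_bad + b"\x00"

print(sha256_hex(known_bad) in blocklist)  # True: the exact file is known
print(sha256_hex(variant) in blocklist)    # False: one extra byte defeats the lookup
```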

CrowdStrike has stringent processes for protecting our corpus against adversarial ML attacks. Our threat researchers also employ advanced techniques to defend against them. Next, we explore CrowdStrike’s Adversarial Pipeline tool, one method we use to continually enhance detection coverage.

Will the Real Adversarial Generators Please Stand Up?

CrowdStrike improves the detection capabilities of our ML models by essentially red teaming our own classifiers. While dynamic adversarial emulation and other standard methods to exploit ML are employed as part of red teaming, the CrowdStrike Adversarial Pipeline stands out as a unique and highly advanced approach. Designed to be automated and extensible, it allows us to rapidly integrate different attacks described by our own threat research team or by outside open source researchers (the open source community is booming with methods to evade ML models).

The CrowdStrike Adversarial Pipeline’s architecture consists of different “generators” for the supported file formats, each with a configurable list of attacks. For example, generators could add an entire megabyte of random English words or sections of clean code to a malware sample without impacting its functionality. These generators work in parallel to provide a flexible tool for generating adversarial samples that can be fed to the classifier. The Adversarial Pipeline can support fast generation of millions of unique samples, making it an extremely powerful tool. And, to improve the robustness or stability of ML classifiers, the generated samples are working binaries that can be executed on the Falcon sensor. In contrast, other mathematical methods use perturbation techniques that yield sterile adversarial samples, which cannot actually be run.
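To make the generator idea concrete, here is a minimal sketch of a configurable attack chain that runs in parallel. It is not CrowdStrike’s implementation. Every name in it (`ATTACKS`, `generate`, `run_generators`, the word list and the placeholder “clean” chunk) is hypothetical, and it treats samples as raw bytes without parsing any executable format or verifying that a variant still runs.

```python
import hashlib
import random
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List

# An attack takes the sample bytes plus a seeded RNG and returns modified bytes.
Attack = Callable[[bytes, random.Random], bytes]

# Hypothetical stand-ins for real payload material.
WORDS = ["alpha", "bridge", "cloud", "delta", "engine", "forest", "garden"]
CLEAN_CHUNK = b"\x00CLEAN-SECTION-PLACEHOLDER\x00"


def append_random_words(sample: bytes, rng: random.Random) -> bytes:
    """Append a run of 200 random English words after the original bytes."""
    text = " ".join(rng.choice(WORDS) for _ in range(200))
    return sample + text.encode("ascii")


def append_clean_chunks(sample: bytes, rng: random.Random) -> bytes:
    """Append between one and eight copies of a placeholder 'clean' chunk."""
    return sample + CLEAN_CHUNK * rng.randint(1, 8)


# The configurable part: attacks are looked up by name from a registry.
ATTACKS: Dict[str, Attack] = {
    "random_words": append_random_words,
    "clean_chunks": append_clean_chunks,
}


def generate(sample: bytes, attack_names: List[str], seed: int) -> bytes:
    """Apply the configured attacks in order, using a per-variant seed."""
    rng = random.Random(seed)
    variant = sample
    for name in attack_names:
        variant = ATTACKS[name](variant, rng)
    return variant


def run_generators(sample: bytes, attack_names: List[str],
                   n_variants: int, workers: int = 4) -> List[bytes]:
    """Generate variants concurrently and keep only unique ones (by SHA-256)."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        variants = pool.map(lambda seed: generate(sample, attack_names, seed),
                            range(n_variants))
        unique = {hashlib.sha256(v).hexdigest(): v for v in variants}
    return list(unique.values())


variants = run_generators(b"ORIGINAL-SAMPLE-BYTES",
                          ["random_words", "clean_chunks"], n_variants=50)
print(len(variants), "unique variants")
```

Seeding each variant separately keeps generation reproducible, and the name-based registry means a new attack can be added without touching the driver. The thread pool only illustrates the fan-out. A pipeline producing millions of samples would more plausibly use separate processes or distributed workers.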

The Bright Side

The situation may seem grim and stacked against protectors, but the good news is that it’s possible to strengthen each stage of ML model development against adversarial attacks. CrowdStrike threat researchers employ multiple tactics, such as cleaning the corpus, deduplication, adding adversarial samples in the training data and improving feature extraction capabilities.
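Two of these tactics, deduplication and adding adversarial samples to the training data, can be sketched in a few lines. This is an illustrative assumption about how such a step might look, not a description of CrowdStrike’s corpus tooling. The `Labeled` type and both function names are hypothetical, and exact-hash matching only catches byte-identical copies, not near-duplicates.

```python
import hashlib
from typing import Iterable, List, Tuple

# (file bytes, label), where 1 = malicious and 0 = clean.
Labeled = Tuple[bytes, int]


def deduplicate(samples: Iterable[Labeled]) -> List[Labeled]:
    """Drop byte-identical duplicates, keeping the first occurrence of each file."""
    seen = set()
    unique: List[Labeled] = []
    for data, label in samples:
        digest = hashlib.sha256(data).digest()
        if digest not in seen:
            seen.add(digest)
            unique.append((data, label))
    return unique


def add_adversarial(corpus: Iterable[Labeled],
                    adversarial_variants: Iterable[bytes]) -> List[Labeled]:
    """Append adversarial variants labeled as malicious, then deduplicate."""
    combined = list(corpus) + [(v, 1) for v in adversarial_variants]
    return deduplicate(combined)


corpus = [(b"malware-a", 1), (b"malware-a", 1), (b"clean-b", 0)]
training_set = add_adversarial(corpus, [b"malware-a-variant", b"malware-a"])
print(len(training_set), "samples after merge and deduplication")
```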

In addition, because they recognize that static ML is only one layer of defense, CrowdStrike threat researchers use ML on behaviors, IOAs and AI-powered IOAs to provide additional protection layers that in unison are difficult to circumvent. This comprehensive approach to protection is why the CrowdStrike Falcon platform continues to lead the industry, including winning the first-ever SE Labs AAA Advanced Security (Ransomware) Award, achieving 100% ransomware prevention with zero false positives.

The Value of Generating Adversarial Samples

Inclusion of new, out-of-sample adversarial samples in ML model training data has shown an increase of 19% in retention of malware samples at high confidence levels. It does so while showing little deviation from the original sample decision value, thus limiting the impact of the attack itself.
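One plausible way to read “retention at high confidence levels” is as the share of malware samples that still score above a high-confidence threshold after being adversarially modified. The sketch below computes that share, along with the mean shift in decision value. The function names, the 0.9 threshold and all scores are synthetic values made up for illustration. They are not CrowdStrike measurements, and the exact metric definition used in the research is an assumption here.

```python
from statistics import mean
from typing import Sequence


def retention_at_threshold(scores_before: Sequence[float],
                           scores_after: Sequence[float],
                           threshold: float) -> float:
    """Of the samples scored >= threshold before modification,
    return the fraction still scored >= threshold afterward."""
    kept = [after >= threshold
            for before, after in zip(scores_before, scores_after)
            if before >= threshold]
    if not kept:
        raise ValueError("no samples met the threshold before modification")
    return sum(kept) / len(kept)


def mean_abs_deviation(scores_before: Sequence[float],
                       scores_after: Sequence[float]) -> float:
    """Average absolute change in decision value caused by the modification."""
    return mean(abs(a - b) for b, a in zip(scores_before, scores_after))


# Synthetic decision values for five malware samples (illustrative only).
before = [0.99, 0.97, 0.95, 0.98, 0.60]
after_baseline = [0.70, 0.96, 0.50, 0.97, 0.40]   # model trained without adversarial samples
after_hardened = [0.96, 0.97, 0.93, 0.98, 0.55]   # model trained with adversarial samples

for name, after in [("baseline", after_baseline), ("hardened", after_hardened)]:
    retention = retention_at_threshold(before, after, threshold=0.9)
    deviation = mean_abs_deviation(before, after)
    print(f"{name}: retention={retention:.2f}, mean deviation={deviation:.3f}")
```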

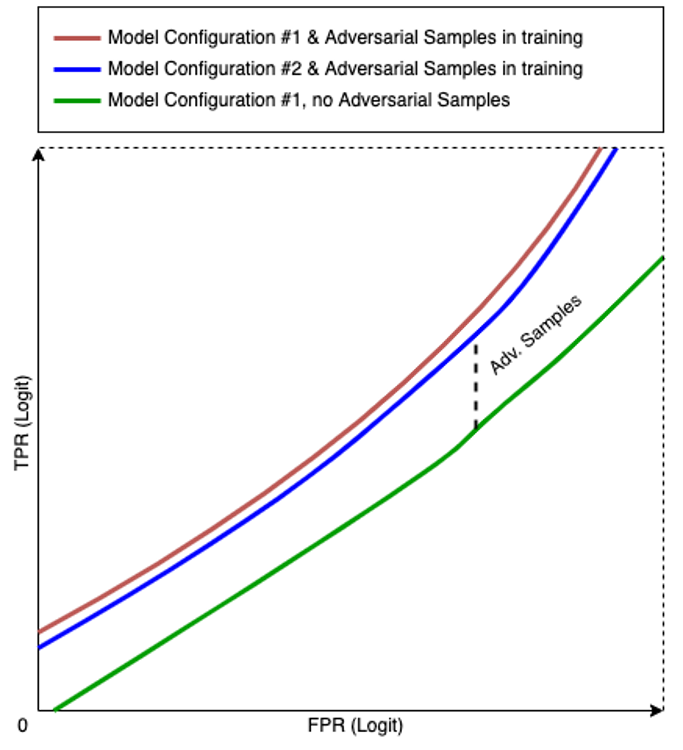

In a subset of data selected to closely mirror characteristics of real-world samples, an experimental detection true positive rate (TPR) of 80% was increased over the course of several steps to 90% at a fixed false positive rate (FPR) through the progressive addition of more adversarial samples in the model training process. This performance improvement was observed not only at a single FPR but across a wide range. A representation of the results is shown in Figure 3.

Figure 3. True positive rate (TPR) performance improvement through the progressive addition of more adversarial samples in the model training process
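For readers unfamiliar with the metric, “TPR at a fixed FPR” means choosing the decision threshold so that no more than a given share of clean files is flagged, then measuring how much malware is caught at that threshold. The following sketch shows one way to compute it. The function name and all scores are hypothetical, and the synthetic numbers were chosen only to echo the 80% and 90% figures above. For simplicity, the same clean scores are reused for both models, whereas in practice each model would produce its own.

```python
from typing import Sequence, Tuple


def tpr_at_fpr(clean_scores: Sequence[float],
               malware_scores: Sequence[float],
               target_fpr: float) -> Tuple[float, float]:
    """Return (threshold, TPR), where a file is flagged if its score is
    strictly above the threshold and at most target_fpr of the clean
    files are flagged."""
    if not clean_scores or not malware_scores:
        raise ValueError("both score lists must be non-empty")
    ranked_clean = sorted(clean_scores, reverse=True)
    allowed_fp = int(target_fpr * len(ranked_clean))  # false positives we may tolerate
    if allowed_fp >= len(ranked_clean):
        threshold = float("-inf")
    else:
        # Scores strictly above this value include at most allowed_fp clean files.
        threshold = ranked_clean[allowed_fp]
    detected = sum(1 for s in malware_scores if s > threshold)
    return threshold, detected / len(malware_scores)


# Synthetic scores (illustrative only, not measured data).
clean = [0.05, 0.10, 0.12, 0.20, 0.22, 0.30, 0.35, 0.40, 0.55, 0.80]
malware_baseline = [0.95, 0.90, 0.85, 0.80, 0.70, 0.65, 0.60, 0.58, 0.50, 0.40]
malware_retrained = [0.97, 0.93, 0.90, 0.85, 0.80, 0.75, 0.70, 0.65, 0.60, 0.45]

threshold, tpr_base = tpr_at_fpr(clean, malware_baseline, target_fpr=0.1)
_, tpr_new = tpr_at_fpr(clean, malware_retrained, target_fpr=0.1)
print(f"threshold={threshold}, baseline TPR={tpr_base}, retrained TPR={tpr_new}")
```

Repeating the calculation over a range of `target_fpr` values yields the kind of curve the figure describes.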

A Mindset for Continuous Research

This research highlights the value of the CrowdStrike Adversarial Pipeline’s ability to generate variations of “new” adversarial samples for enhancing the detection coverage and efficacy of CrowdStrike’s ML models. Using this tool in the model training process helps our models continue to improve against adversarial attacks that modify known malware in an attempt to make it fully undetectable.

CrowdStrike researchers constantly explore theoretical and applied ML research to advance the detection capabilities and efficacy of our ML models, setting the industry standard in protecting customers from sophisticated threats and adversaries to stop breaches.

Additional Resources

- Read about how machine learning is used in cybersecurity.

- Learn more about the CrowdStrike Falcon platform by visiting the product webpage.

- Test CrowdStrike next-gen AV for yourself. Start your free trial of CrowdStrike Falcon® Prevent next-gen antivirus today.