Five Questions Security Teams Need to Ask to Use Generative AI Responsibly

Since announcing Charlotte AI, we’ve engaged with many customers to show how this transformational technology will unlock greater speed and value for security teams and expand their arsenal in the fight against modern adversaries. Customer reception has been overwhelmingly positive as organizations see how Charlotte AI will make their teams faster and more productive and help analysts learn new skills, which is critical to beating adversaries in the emerging generative AI arms race.

But just as often as we hear excitement and enthusiasm, we’re also hearing hesitation and unease about using generative AI for security use cases, and these sentiments are also well-founded. We’ve already seen numerous examples of generative AI lowering the barrier for new threat actors to conduct sophisticated and scalable attacks, from deepfakes to high-fidelity phishing emails and AI-generated ransomware. Every groundbreaking technology brings new risks that organizations must be aware of and anticipate, and generative AI is no different. As Dr. Sven Krasser, SVP & Chief Scientist of CrowdStrike, recently noted, “Generative AI has its own attack surface.” That attack surface introduces critical considerations for how this technology is procured, trained, regulated and fortified against attacks. Early public policy efforts to drive industry best practices for developing safe and trustworthy generative AI are also emerging, such as the White House Executive Order issued on Oct. 30, 2023.

In parallel with emerging regulations, it is imperative that organizations consider key risks, especially when using generative AI in security technology. Some of the most pressing questions security teams should weigh when evaluating generative AI applications include:

- How do we ensure generative AI-produced answers are accurate?

- How do we protect organizations against the new attack surface of generative AI — including data poisoning and prompt injection?

- How do we ensure customer privacy is upheld?

- How do we prevent unauthorized data leakage?

- How will generative AI transform the role of the analyst — and should we all be looking for new jobs?

Beyond these concerns, the heightened sophistication of adversaries and the growing complexity of modern security operations require a generative AI approach that is purpose-built for security teams — one that can also fluently understand security syntax and anticipate analyst workflows.

CrowdStrike is uniquely positioned to lead the security industry’s adoption of generative AI: the AI-native Falcon® platform has been at the forefront of AI-powered detection innovation since its founding. To enable organizations to safely embrace generative AI, we’ve centered both the needs and the concerns of security teams in the architecture of Charlotte AI, knowing that the information it surfaces to analysts will ultimately inform their decisions and shape their organization’s risk posture. The result is that Charlotte AI delivers performance without compromise: elevating security analysts and accelerating their workflows, all while protecting privacy, auditing for accuracy and enforcing role-based safeguards for maximum safety.

Built for Accuracy: Preventing AI Hallucinations

AI “hallucinations” are inaccurate (occasionally even nonsensical or implausible) answers to user questions. Intrinsic hallucinations occur when the output of a large language model contradicts the available source content. Extrinsic hallucinations occur when the output cannot be verified against the source content, often because the model doesn’t have access to the information the user requested. When this happens, many generative AI systems produce responses that appear plausible but are in fact incorrect.

AI hallucinations in consumer-facing large language model (LLM) products can range from the funny to the farcical — but in security, inaccuracy is no laughing matter. Incorrect security information can have severe consequences for an organization, from interrupted operations to a weakened risk posture to a missed breach. For this exact reason, one of the defining ways Charlotte AI is purpose-built for security teams is that it is first and foremost designed to be truthful. Charlotte AI’s safeguards for accuracy arise from three key areas: 1) how Charlotte AI is using LLMs, 2) the data its models are trained on, and 3) the data its LLMs have access to.

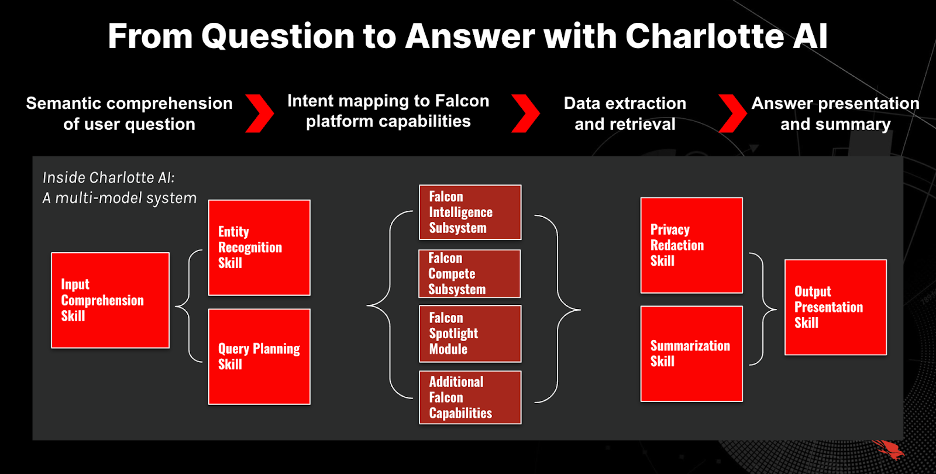

Charlotte AI uses LLMs in two principal ways: first, to semantically understand user questions and identify how to use the Falcon platform to obtain the answers needed, and second, to decide how to present and summarize findings to users. To perform these tasks, Charlotte AI’s LLMs are trained on the product documentation and APIs of the CrowdStrike Falcon® platform. This makes Charlotte AI an expert in the Falcon platform, endowed with the ability to query and extract data from the many modules and APIs of the platform.

We can break down the sequence of skills Charlotte AI applies to answer questions the following way:

Step 1: Identify what information is needed to answer the user’s question

Step 2: Map the required information to the Falcon platform capabilities that can provide it and that the user can access

Step 3: Retrieve information from the relevant capabilities

Step 4: Structure and summarize findings in an intuitive format

Step 5: Report the sources of information and the corresponding API calls behind the answer, which are included with every Charlotte AI response (available by clicking the “Show Response Details” toggle)

Figure 1. Sequence of skills Charlotte AI applies for understanding user questions and generating answers using the data of the Falcon platform
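The five steps above can be illustrated with a short Python sketch. It is not Charlotte AI’s implementation and does not call any Falcon API: the `Capability` and `Answer` types, the `answer_question` function, the registry entries and permission strings such as `detections:read` are all hypothetical. Step 1 (working out which information a question needs, which Charlotte AI does with an LLM) is assumed to have already produced the `needs` list, and a simple template stands in for LLM summarization in step 4. The sketch also shows one way to handle the extrinsic-hallucination case: when no permitted data source covers a need, it returns an explicit refusal instead of a plausible-sounding guess.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Set


@dataclass
class Capability:
    """A hypothetical platform capability the assistant can query."""
    name: str                           # illustrative label, e.g. "detections.query"
    required_permission: str            # permission the *user* must hold
    fetch: Callable[[str], List[dict]]  # returns raw records for a question


@dataclass
class Answer:
    summary: str
    sources: List[str] = field(default_factory=list)  # provenance for auditing


def answer_question(question: str, needs: List[str],
                    registry: Dict[str, Capability],
                    user_permissions: Set[str]) -> Answer:
    # Step 1 is assumed done upstream: `needs` names the information required.
    # Step 2: map each need to a capability the user is allowed to use.
    plan: List[Capability] = []
    for need in needs:
        cap = registry.get(need)
        if cap is None or cap.required_permission not in user_permissions:
            # No permitted source covers this need: refuse instead of guessing.
            return Answer(summary=f"Cannot answer: no accessible data source for '{need}'.")
        plan.append(cap)

    # Step 3: retrieve data only from the planned capabilities.
    results = {cap.name: cap.fetch(question) for cap in plan}

    # Step 4: summarize (a template stands in for LLM summarization here).
    summary = "; ".join(f"{name}: {len(rows)} record(s)" for name, rows in results.items())

    # Step 5: report which capabilities the answer was built from.
    return Answer(summary=summary, sources=list(results))


# Example usage with stubbed (hypothetical) data sources.
registry = {
    "detections": Capability("detections.query", "detections:read",
                             lambda q: [{"id": "d1"}, {"id": "d2"}]),
    "hosts": Capability("hosts.query", "hosts:read", lambda q: [{"id": "h1"}]),
}
full = answer_question("Which hosts had detections?", ["detections", "hosts"],
                       registry, {"detections:read", "hosts:read"})
print(full.summary)  # detections.query: 2 record(s); hosts.query: 1 record(s)
print(full.sources)  # ['detections.query', 'hosts.query']

limited = answer_question("Which hosts had detections?", ["detections", "hosts"],
                          registry, {"detections:read"})
print(limited.summary)  # Cannot answer: no accessible data source for 'hosts'.
```

Because every answer carries the list of capabilities it was built from, a reviewer can re-run those same queries to check the result, which is the property the “Show Response Details” toggle exposes to users.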

There are three important benefits of this design:

- Charlotte AI only uses the data from the Falcon platform: Charlotte AI is able to surface the industry-leading telemetry and threat intelligence of the Falcon platform, which is continuously enriched by insight from CrowdStrike’s threat hunters, data scientists, managed detection and response (MDR) teams and incident responders. By focusing Charlotte AI’s data sources on the high-fidelity intelligence contained in the Falcon platform, Charlotte AI has architectural safeguards against data poisoning.

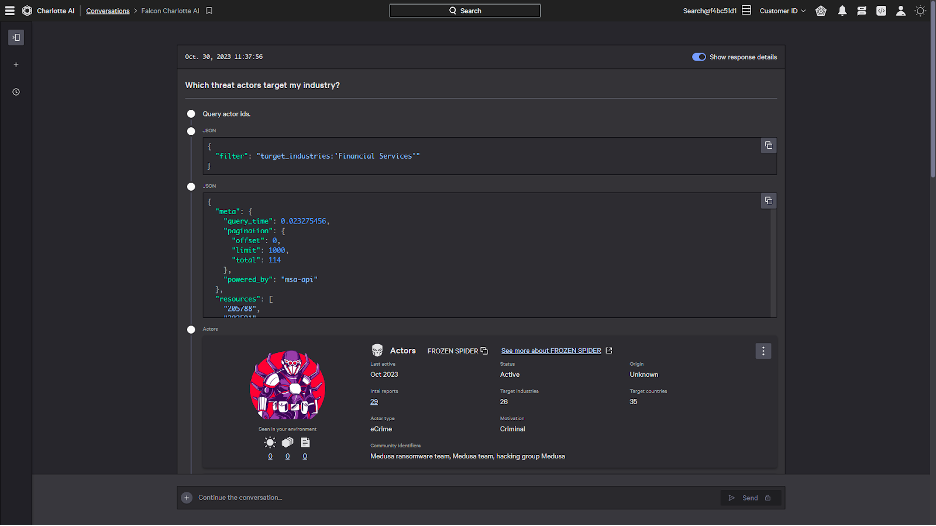

- Charlotte AI’s answers are auditable and traceable: Every answer Charlotte AI provides can be inspected and audited using the “Show Response Details” toggle included with every answer. Moreover, because Charlotte AI can only extract information from what a user has access to, Charlotte AI’s work will also be reproducible by security teams.

Figure 2. Charlotte AI provides users with the option to inspect and audit the source of every answer by selecting the “Show Response Details” toggle

- Charlotte AI supports ongoing up-skilling and enables teams to realize the full value of the Falcon platform: As adversaries become proficient in new domains, from endpoints to cloud environments to identities, organizations have expanded their investments in tools to monitor and defend against emerging attack vectors. All too often this results in fractured technology stacks of point solutions that create blind spots, delay response times and increase operational overhead. To combat this, organizations are increasingly turning to unified, consolidated security platforms like CrowdStrike Falcon that offer integrated visibility and cross-domain defense. But even with integrated platforms, keeping up with new features can be a Sisyphean task. As an expert in the Falcon platform’s modules, features and APIs, Charlotte AI will enable teams to continuously maximize the value they get from the Falcon platform by eliminating time spent scanning documentation, minimizing cycles spent editing scripts and removing guesswork from navigating the console.

Built for Privacy: Protecting Customer PII

Generative AI technology also raises critical privacy concerns. Users should understand what data, if any, is being shared with third parties. Under the hood, Charlotte AI leverages multiple technologies, including models fine-tuned by CrowdStrike as well as various third-party technologies. Charlotte AI routes sensitive data through the technologies of trusted partners, such as Amazon Bedrock, rather than through services that use user questions to train their models. This enables Charlotte AI to provide customers with the best possible answer to their question within the bounds of CrowdStrike’s privacy guarantees.
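The routing rule described above can be expressed as a simple policy check. This Python sketch illustrates the idea under stated assumptions and is not CrowdStrike’s routing logic: the `ModelProvider` type, the `trains_on_user_prompts` flag (which in practice would come from reviewing each provider’s data-use terms) and the provider names are hypothetical, and no real provider API is called.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class ModelProvider:
    name: str                     # hypothetical provider label
    trains_on_user_prompts: bool  # assumption: determined from the provider's data-use terms


def select_provider(providers: List[ModelProvider],
                    contains_sensitive_data: bool) -> ModelProvider:
    """Return the first provider (in preference order) allowed to receive this request."""
    for provider in providers:
        if contains_sensitive_data and provider.trains_on_user_prompts:
            continue  # never send sensitive data to a service that trains on prompts
        return provider
    # Fail closed: no eligible provider means the request is not sent anywhere.
    raise LookupError("no provider satisfies the privacy policy for this request")


# Example: the preference order puts a general-purpose API first.
providers = [
    ModelProvider("public-chat-api", trains_on_user_prompts=True),
    ModelProvider("managed-private-endpoint", trains_on_user_prompts=False),
]
print(select_provider(providers, contains_sensitive_data=False).name)  # public-chat-api
print(select_provider(providers, contains_sensitive_data=True).name)   # managed-private-endpoint
```

Raising an error when no provider qualifies, rather than falling back to whatever is available, keeps a misconfigured provider list from silently sending sensitive data to the wrong place.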

Users should also understand what data the large language models in their products are trained on. Charlotte AI’s LLMs are trained on the product documentation and APIs of the Falcon platform.

Built for Safety: Preventing Unauthorized Data Exposure

Safety concerns around generative AI can surface as internal and external risks. Internally, organizations face risks on two fronts: first, how do you prevent unauthorized data exposure within your organization, and second, who is ultimately responsible and accountable for actions taken based on information surfaced by generative AI? Externally, organizations should also consider how their products are protected against adversarial tampering, such as prompt injection or prompt leakage.

Role-based access controls: Charlotte AI respects the role-based access control (RBAC) policies already instituted in a user’s Falcon environment and operates within that user’s assigned privileges. In other words, Charlotte AI cannot access data, modules or capabilities that the user doesn’t already have access to.
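One way to picture this is an assistant that holds no privileges of its own and checks every data request against the permissions of the user it is acting for. The Python sketch below uses hypothetical role names, permission strings and tool functions (`ROLE_PERMISSIONS`, `run_as_user`, `list_hosts`); it illustrates the general RBAC pattern, not how the Falcon platform defines or enforces roles.

```python
from typing import Callable, Dict, Set, TypeVar

T = TypeVar("T")

# Hypothetical role-to-permission mapping, as an administrator might configure it.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "tier1_analyst": {"detections:read"},
    "incident_responder": {"detections:read", "hosts:read", "rtr:write"},
}


class PermissionDenied(Exception):
    """Raised when the requesting user's role lacks the needed permission."""


def run_as_user(user_role: str, permission: str, tool: Callable[[], T]) -> T:
    """Run `tool` on the user's behalf only if their role grants `permission`.

    The assistant never falls back to broader credentials: an unknown role
    gets an empty permission set, so the request is denied.
    """
    granted = ROLE_PERMISSIONS.get(user_role, set())
    if permission not in granted:
        raise PermissionDenied(f"role '{user_role}' lacks '{permission}'")
    return tool()


def list_hosts() -> list:
    """Hypothetical stand-in for a host-inventory query."""
    return ["host-a", "host-b"]


print(run_as_user("incident_responder", "hosts:read", list_hosts))  # ['host-a', 'host-b']
try:
    run_as_user("tier1_analyst", "hosts:read", list_hosts)
except PermissionDenied as err:
    print(err)  # role 'tier1_analyst' lacks 'hosts:read'
```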

The analyst is the authority and is accountable: While humans are often said to be the weakest link in security, CrowdStrike has always held that expert-provided ground truth and analyst context are among security’s greatest strengths. In an AI-native SOC, the analyst will remain essential in reviewing, deciding on and authorizing security operations, informed by the output of generative AI. Herein lies the difference between Charlotte AI’s expertise and the role of the security analyst: Charlotte AI is an expert in the Falcon platform and will enable the analyst to find critical information quickly and proficiently, compressing hours of work into minutes by leveraging the latest functionality and real-time intelligence of the Falcon platform. But ultimately it falls to analysts to direct Charlotte AI’s tasks and decide what strategic actions to take in their environments. For instance, if an analyst has identified detections on a host group, Charlotte AI can author a recommended script for use in Falcon Real Time Response (RTR), but it is up to the user to review the script, save it into Falcon RTR and run it.

Preventing prompt injection and prompt leakage: Prompt injection, a technique for feeding generative AI input that makes it act in ways not originally intended, has drawn significant attention from security researchers interacting with tools such as Bing’s AI chatbot, Sydney. Researchers have also flagged the risk of threat actors uncovering vulnerabilities in user environments by prompting generative AI systems to reveal previously asked questions (known as prompt leakage), exposing analyst investigations or areas of analyst focus. By design, Charlotte AI has several mitigations against these types of attacks. As an interface to a user’s Falcon environment, Charlotte AI can’t access data or capabilities that the user doesn’t already have access to. Moreover, any changes Charlotte AI makes to a user’s environment must first be reviewed and approved by an authorized analyst, which acts as an additional safeguard against prompt injections that attempt to trigger an action.
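The review-and-approve safeguard can be sketched as a small state machine in which generated output can only propose an action, and nothing runs until an authorized analyst approves it. This Python example is hypothetical: the `ProposedAction` type, the `approve` and `execute` functions, the analyst names and the `runner` callback are illustrative, and it does not model Falcon RTR’s actual workflow or API. Its point is that a successful prompt injection can, at worst, add a proposal to the queue rather than execute anything.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, Optional, Set


class Status(Enum):
    PROPOSED = "proposed"
    APPROVED = "approved"
    EXECUTED = "executed"


@dataclass
class ProposedAction:
    description: str
    script: str                       # e.g. a recommended response script (hypothetical)
    status: Status = Status.PROPOSED  # everything the assistant generates starts here
    approved_by: Optional[str] = None


def approve(action: ProposedAction, analyst: str, authorized: Set[str]) -> None:
    """Record approval; only analysts in `authorized` may approve."""
    if analyst not in authorized:
        raise PermissionError(f"{analyst} is not authorized to approve actions")
    if action.status is not Status.PROPOSED:
        raise ValueError(f"cannot approve an action that is {action.status.value}")
    action.status = Status.APPROVED
    action.approved_by = analyst


def execute(action: ProposedAction, runner: Callable[[str], str]) -> str:
    """Run an approved action once via the supplied runner."""
    if action.status is not Status.APPROVED:
        raise PermissionError("action must be approved by an authorized analyst first")
    result = runner(action.script)
    action.status = Status.EXECUTED
    return result


# Example usage with a stub runner instead of a real response tool.
action = ProposedAction("Collect process list on affected hosts", "ps")
try:
    execute(action, runner=lambda s: f"ran: {s}")
except PermissionError as err:
    print(err)  # action must be approved by an authorized analyst first

approve(action, "alice", authorized={"alice"})
print(execute(action, runner=lambda s: f"ran: {s}"))  # ran: ps
print(action.status.value)  # executed
```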

Responsibly Embracing Innovation

As adversaries reach new heights of attack sophistication with AI, organizations must be equipped to meet them on the battlefield with an equal, if not superior, response. Generative AI has the potential to turbocharge security teams, maximizing their output with both scale and speed. While the risks of generative AI should not impede its adoption by security teams, understanding those risks should inform the criteria organizations apply when choosing a security vendor and partner, favoring technology purpose-built by security experts for security practitioners.

- Join the Charlotte AI private preview: Existing CrowdStrike customers should inquire with their account teams to learn more and apply for the Charlotte AI private preview program.

- Learn more about Charlotte AI: Visit the Charlotte AI product page.

- Read CrowdStrike’s position on the new U.S. policy for AI.

- Explore the Falcon platform: Learn more about CrowdStrike’s industry-leading technology, services and intelligence.

- Get started today: Experience the Falcon platform in action with a 15-day free trial.