Containerization technology is the backbone of how modern applications are developed and scaled. As businesses increasingly rely on containerized workloads, it’s essential to understand key technologies like Docker and Kubernetes.

Docker is a versatile platform responsible for creating, managing, and sharing containers on a single host, while Kubernetes is a container orchestration tool responsible for the management, deployment, and monitoring of clusters of containers across multiple nodes.

In this article, we’ll explore how containerization technologies like Kubernetes and Docker manage workloads for scalable, resilient, and platform-independent applications. We’ll discuss containers, container runtimes, and orchestration engines. Then, we’ll look at the benefits of using Kubernetes and Docker together.

An Introduction to Containers

Containerization lets engineers group application code with application-specific dependencies into a lightweight package called a container. Containers virtualize at the operating-system level. They share the host’s kernel, and the kernel isolates each container’s processes, file system, and network and limits how much CPU and RAM it can consume. Because each container has its own file system with its own dependencies, applications running side by side on one host avoid dependency conflicts. This is why many of today’s distributed applications are built on containers. It also differentiates containers from virtual machines, which emulate a complete machine and run their own guest operating system on resources allocated in advance.

Why Use Container Runtimes and Container Orchestration Engines?

A container runtime is a software component responsible for managing a container’s lifecycle on a host operating system, including creating, starting, stopping, and deleting containers. In a cluster, it works with a container orchestration engine. The engine decides where and when containers should run, and it instructs the runtime on each node accordingly. For example, Docker is a container technology commonly used alongside the Kubernetes orchestration engine.

Container orchestration engines (COEs) like Kubernetes simplify container management and automate complex tasks that include:

- Auto-scaling containers

- Configuring networks

- Load balancing

- Performing health checks

By automating these tasks, COEs keep containerized workloads running efficiently as they grow.
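Much of this automation follows one control-loop idea. The engine compares the state you declared (for example, "run three copies of this container") with the state it observes, then acts to close the gap. The Python sketch below models a single pass of such a loop for replica counts only. The pod names and the start/stop action strings are hypothetical. A real engine such as Kubernetes also handles scheduling, health checks, and retries.

```python
def reconcile(desired: int, running: list[str], prefix: str = "web") -> list[str]:
    """One pass of a toy reconcile loop: return the actions that move the
    observed replicas (running) toward the desired replica count.

    Pod names of the form "<prefix>-<n>" and the "start"/"stop" action
    strings are hypothetical, chosen only for illustration.
    """
    actions: list[str] = []
    missing = desired - len(running)
    if missing > 0:
        # Too few replicas: start new ones, skipping names already in use.
        taken = set(running)
        index = 0
        while missing > 0:
            candidate = f"{prefix}-{index}"
            if candidate not in taken:
                actions.append(f"start {candidate}")
                taken.add(candidate)
                missing -= 1
            index += 1
    elif missing < 0:
        # Too many replicas: stop the surplus (here, the last names in sorted order).
        for pod in sorted(running)[desired:]:
            actions.append(f"stop {pod}")
    # missing == 0: observed state already matches desired state, so do nothing.
    return actions


if __name__ == "__main__":
    print(reconcile(3, ["web-0"]))                    # ['start web-1', 'start web-2']
    print(reconcile(1, ["web-0", "web-1", "web-2"]))  # ['stop web-1', 'stop web-2']
```

Running the same comparison repeatedly makes the design self-correcting. If a container disappears between passes, the next pass notices the gap and starts a replacement.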

An Introduction to Docker and Kubernetes

Docker

The container runtime is an essential component of Docker, providing the environment to run and manage containers. As a containerization technology, Docker offers a command-line interface, known as the Docker client, that manages workloads on the host machine. The client sends requests to the Docker daemon (dockerd), which in turn uses a container runtime called containerd.

The Docker client comes in two forms:

- Docker Compose: Helps manage applications made up of multiple containers and can start or stop them all with a single command.

- docker: Manages containers individually to start, stop, or remove them.

Docker also handles other parts of the containerization process:

- Docker images are built from a text file called a Dockerfile and are stored in a registry, a stateless server-side application that stores and distributes images (such as Docker Registry). Registries can run locally or be hosted in the cloud (for example, Docker Hub).

- A mount point is a location in a container’s file system where a directory or file from the host file system is mounted. When starting a container, Docker sets up its mount points and configures the kernel to isolate the container and limit the CPU and memory it can use. It also maps any requested host ports to the container.
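To make mount points and resource limits concrete, the Python sketch below assembles (but does not run) a docker run command. It uses the real --mount, --cpus, --memory, and --publish flags. The image name, container name, paths, and limit values are hypothetical examples.

```python
import shlex


def docker_run_command(image: str, name: str, host_dir: str, container_dir: str,
                       host_port: int, container_port: int,
                       cpus: float, memory: str) -> list[str]:
    """Build an argv list for `docker run`; all argument values are illustrative."""
    return [
        "docker", "run", "--detach",
        "--name", name,
        # Bind-mount a host directory at a mount point inside the container.
        "--mount", f"type=bind,source={host_dir},target={container_dir}",
        # Cap the CPU and memory the container may use (enforced by the kernel).
        "--cpus", str(cpus),
        "--memory", memory,
        # Publish a host port and forward it to the container's port.
        "--publish", f"{host_port}:{container_port}",
        image,
    ]


if __name__ == "__main__":
    cmd = docker_run_command("nginx:1.27", "web", "/srv/site", "/usr/share/nginx/html",
                             8080, 80, cpus=0.5, memory="256m")
    print(shlex.join(cmd))
```

On a host with Docker installed, passing the list to subprocess.run(cmd, check=True) would start the container. Keeping the command as a list rather than a single string avoids shell-quoting problems.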

Despite its many strengths, Docker on its own is limited when it comes to the following:

- Managing containers spread across multiple nodes from a single point of control

- Bulk operations in a multi-node environment (such as auto-scaling containers)

However, Kubernetes helps overcome these limitations by managing containerized workloads across a cluster of nodes.

Kubernetes

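Kubernetes is a powerful orchestration tool for managing containers across multiple hosts. It relies on its Container Runtime Interface (CRI), a plugin interface that lets the kubelet on each node use any compatible container runtime, such as containerd or CRI-O, to create, delete, and manage containers. Kubernetes v1.24 removed the built-in dockershim, so Docker Engine itself now needs an adapter (cri-dockerd) to act as a Kubernetes runtime. Images built with Docker, however, run unchanged on any CRI-compatible runtime.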

Kubernetes streamlines cluster management with resource objects such as Deployments. A Deployment treats a group of application pods as one unit; a pod is the smallest deployable unit in Kubernetes, made up of one or more containers that share network and storage. This makes it easy to scale the pods or update their container images with a single command.
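As an illustration, the sketch below builds a minimal apps/v1 Deployment manifest in Python and prints it as JSON, which kubectl apply -f accepts alongside YAML. The Deployment name, image tag, replica count, and port are hypothetical.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int, port: int) -> dict:
    """Minimal Deployment manifest; every argument value here is illustrative."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            # Number of identical pods the Deployment keeps running.
            "replicas": replicas,
            # The selector tells the Deployment which pods it manages...
            "selector": {"matchLabels": labels},
            # ...and the template stamps those labels onto every pod it creates.
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,
                        "ports": [{"containerPort": port}],
                    }],
                },
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(deployment_manifest("web", "nginx:1.26", replicas=3, port=80), indent=2))
```

After saving the output (for example, as web.json) and running kubectl apply -f web.json, you can scale the whole group with kubectl scale deployment/web --replicas=5. You can move it to a new image with kubectl set image deployment/web web=nginx:1.27, where the first "web" after the slash is the container name from the manifest.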

Kubernetes provides operators with broader visibility over the cluster by logging at three different levels of granularity:

- Container-level logs capture the stdout and stderr streams of applications running in containers.

- Node-level logs are the container logs collected on each node, which are rotated according to the node’s log rotation settings (configured on the kubelet rather than per Deployment).

- Cluster-level logs provide deeper insights into Kubernetes components, such as etcd or kube-proxy.
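On nodes that use a CRI runtime such as containerd, container-level logs are typically written to disk as lines of the form "timestamp stream tag message". Here the stream is stdout or stderr, and the tag is P for a partial line or F for a full one. The Python sketch below parses that format and rejoins partial lines, assuming exactly this layout. The sample log lines are made up, and in day-to-day use kubectl logs does this work for you.

```python
from dataclasses import dataclass

VALID_STREAMS = ("stdout", "stderr")


@dataclass
class LogEntry:
    timestamp: str  # RFC 3339 timestamp with nanoseconds, kept as a string here
    stream: str     # "stdout" or "stderr"
    partial: bool   # True for tag "P": the message continues in a later entry
    message: str


def parse_cri_line(line: str) -> LogEntry:
    # Split into at most four fields; the message itself may contain spaces.
    parts = line.rstrip("\n").split(" ", 3)
    if len(parts) < 3 or parts[1] not in VALID_STREAMS:
        raise ValueError(f"not a CRI log line: {line!r}")
    timestamp, stream, tag = parts[0], parts[1], parts[2]
    message = parts[3] if len(parts) == 4 else ""
    return LogEntry(timestamp, stream, tag == "P", message)


def reassemble(lines: list[str]) -> list[tuple[str, str]]:
    """Join partial entries per stream and return (stream, message) pairs."""
    pending: dict[str, list[str]] = {s: [] for s in VALID_STREAMS}
    messages: list[tuple[str, str]] = []
    for line in lines:
        entry = parse_cri_line(line)
        pending[entry.stream].append(entry.message)
        if not entry.partial:
            messages.append((entry.stream, "".join(pending[entry.stream])))
            pending[entry.stream] = []
    return messages


if __name__ == "__main__":
    sample = [
        "2024-05-01T10:00:00.000000001Z stdout F server listening on :8080",
        "2024-05-01T10:00:01.000000001Z stderr P connection ",
        "2024-05-01T10:00:01.000000002Z stderr F refused",
    ]
    for stream, message in reassemble(sample):
        print(stream, message)
```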

Why Use Kubernetes With Docker?

Kubernetes and Docker are technologies that complement each other. Used together, they offer benefits in four areas:

- High Availability

- Auto-Scaling

- Storage

- Monitoring Dashboards

High Availability

On its own, Docker manages containers on a single host, so if that host fails, its containers go down with it. Kubernetes addresses this by scheduling containers across multiple nodes. When a node crashes or becomes unhealthy, Kubernetes replaces the pods that were running on it with new pods on healthy nodes. Adding replacement nodes requires a separate component such as a cluster autoscaler. Because the workload stays spread across the cluster, this approach helps maintain high availability.
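The rescheduling step can be pictured with a toy model: pods assigned to nodes that are no longer healthy are moved to the healthy node running the fewest pods. This least-loaded rule is an assumption chosen for simplicity. The real kube-scheduler filters and scores nodes on many more factors, such as resource requests, affinity, and taints. In practice, a controller such as a Deployment’s ReplicaSet creates the replacement pods that the scheduler then places. The node and pod names below are hypothetical.

```python
from collections import Counter


def reschedule(assignments: dict[str, str], healthy_nodes: list[str]) -> dict[str, str]:
    """Return a new pod -> node map in which pods on unhealthy nodes are moved.

    Toy placement rule (an assumption, far simpler than kube-scheduler): each
    displaced pod goes to the healthy node currently running the fewest pods,
    breaking ties by node name.
    """
    if not healthy_nodes:
        raise RuntimeError("no healthy nodes available")
    healthy = set(healthy_nodes)
    # Pods on healthy nodes stay where they are.
    result = {pod: node for pod, node in assignments.items() if node in healthy}
    # Count how many pods each healthy node already runs.
    load = Counter({node: 0 for node in healthy_nodes})
    load.update(result.values())
    displaced = sorted(pod for pod, node in assignments.items() if node not in healthy)
    for pod in displaced:
        target = min(healthy_nodes, key=lambda node: (load[node], node))
        result[pod] = target
        load[target] += 1
    return result


if __name__ == "__main__":
    before = {"web-1": "node-a", "web-2": "node-b", "web-3": "node-c", "web-4": "node-c"}
    # node-c has crashed, so only node-a and node-b are healthy.
    print(reschedule(before, ["node-a", "node-b"]))
```

The displaced pods end up split between the two surviving nodes instead of piling onto one, which is the "balanced workload distribution" idea in miniature.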

Auto-Scaling

Kubernetes has built-in support for auto-scaling. Users can define scaling criteria, such as target values for one or more metrics (like CPU or memory utilization). When utilization rises above these targets (for example, during a traffic spike), Kubernetes can automatically add pod replicas. When utilization drops below them, it can scale the replicas back down. This helps keep latency low without paying for idle capacity.
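For the HorizontalPodAutoscaler, the Kubernetes documentation describes the core calculation as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). No change is made when the ratio is within a tolerance, 0.1 by default. The Python sketch below reproduces only that formula plus min/max clamping. The real controller also applies stabilization windows and handles missing metrics and pods that are not yet ready. The min/max defaults here are hypothetical.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Replica count suggested by the HPA's core formula.

    min_replicas and max_replicas defaults are hypothetical; tolerance mirrors
    the documented 0.1 default. Stabilization windows and readiness handling
    used by the real controller are deliberately left out.
    """
    ratio = current_metric / target_metric
    # Close enough to the target: leave the replica count unchanged.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Keep the result inside the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    print(desired_replicas(4, current_metric=90, target_metric=60))  # 6: CPU at 90% vs. a 60% target
    print(desired_replicas(6, current_metric=30, target_metric=60))  # 3: load has halved
```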

Storage

To address the often-ephemeral nature of containers, Docker provides two options for persistent storage: volumes and bind mounts. Kubernetes goes further by abstracting the storage layer away from the containers, which allows integration with many kinds of storage, such as:

- Non-persistent storage

- Persistent storage (such as Network File System (NFS) or Fibre Channel)

- Ephemeral storage (such as emptyDir and ConfigMap volumes)

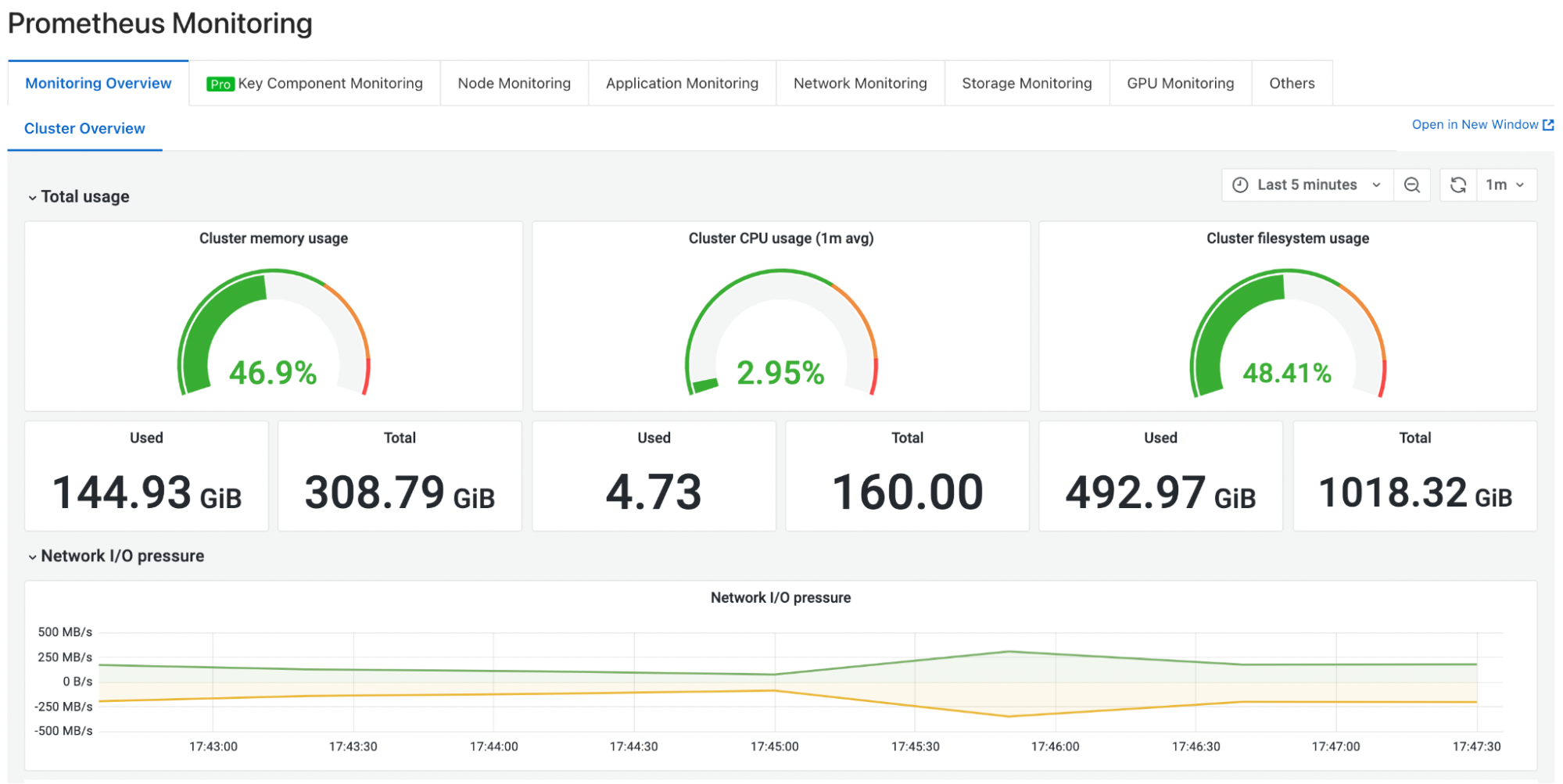
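To show how these options appear in a workload, the Python sketch below builds a Pod manifest as a dictionary that can be serialized to JSON for kubectl. The pod mounts three volumes: an emptyDir for scratch space, a ConfigMap for configuration, and a PersistentVolumeClaim for data that should outlive the pod. The pod, claim, and ConfigMap names and the mount paths are hypothetical, and the claim and ConfigMap would need to exist in the cluster already.

```python
import json


def pod_with_volumes(name: str, image: str, claim_name: str, config_map: str) -> dict:
    """Pod manifest mounting one ephemeral, one config, and one persistent volume."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "containers": [{
                "name": "app",
                "image": image,
                # Each mount refers to a volume declared below by name.
                "volumeMounts": [
                    {"name": "scratch", "mountPath": "/tmp/scratch"},
                    {"name": "data", "mountPath": "/var/lib/app"},
                    {"name": "config", "mountPath": "/etc/app", "readOnly": True},
                ],
            }],
            "volumes": [
                # Ephemeral: created empty with the pod and deleted with it.
                {"name": "scratch", "emptyDir": {}},
                # Persistent: backed by whatever storage the claim is bound to (NFS, FC, ...).
                {"name": "data", "persistentVolumeClaim": {"claimName": claim_name}},
                # Ephemeral: ConfigMap keys projected into the container as files.
                {"name": "config", "configMap": {"name": config_map}},
            ],
        },
    }


if __name__ == "__main__":
    print(json.dumps(pod_with_volumes("app", "nginx:1.27", "app-data", "app-config"), indent=2))
```

Because the container only refers to volume names and mount paths, the backing storage can change (for example, from NFS to Fibre Channel) without touching the container definition.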

Monitoring Dashboards

Monitoring is now a fundamental aspect of application lifecycle management. Kubernetes supports integration with various monitoring tools that provide dashboards and alerting for swift incident response. Prometheus (which collects metrics and evaluates alerting rules) and Grafana (which visualizes metrics in dashboards) are a popular combination. Together they offer a user-friendly interface with alerts, visibility, and analytics for metrics across a microservice infrastructure.

Prometheus Dashboard

Summary

Containerization technology has become an essential aspect of modern applications. Container platforms such as Docker and container orchestration engines such as Kubernetes complement each other, working together to simplify container management.

Docker is a lightweight solution that resolves deployment issues across multiple environments by packaging an application with its dependencies. Kubernetes builds on containerization with features such as multi-node scheduling, auto-scaling, and pluggable storage, giving operators wider visibility and control over clusters running complex workloads.

Used together, Docker and Kubernetes make deploying and scaling large, complex distributed systems simpler and more manageable.